Using an ESP32 microcontroller with Wi-Fi and Bluetooth capability, a gyroscope/accelerometer module and keyboard switches, Jurij Podgoršek will build an experimental, low-cost motion “glove” controller with a button for each finger.

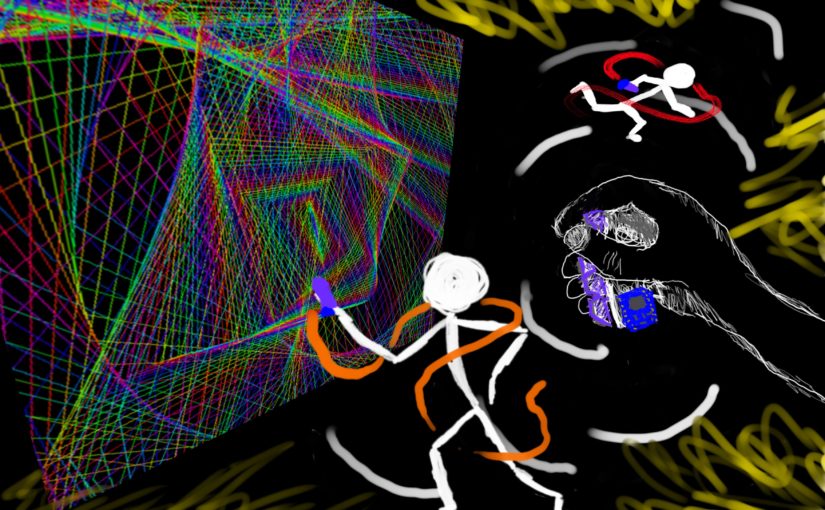

The idea stems from an earlier abstract visualisation project that was intended to visualise music. After building the initial prototype and connecting it to a touch interface with sliders that modulate the visuals, the author asked himself: why should I make the program interpret music? We already do that when we listen, and we can react by dancing. With a motion sensor, the dancing can be “amplified” by turning bodily motions into shapes and colours projected onto a surface.

With the motion mitt, the operator of the visuals isn’t tied to a clumsy little touchscreen and can instead become immersed in the experience of sound and video while co-creating it. A workshop will be held to build a number of gloves that can connect in an ad-hoc network, so that a group of people can collaborate with them.

The glove(s) will send events via the Open Sound Control (OSC) protocol, opening up the possibility of using them for audio synthesis and modulation, or even as a general-purpose input interface.
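To give a feel for what such an event looks like on the wire, here is a minimal sketch of how an OSC 1.0 message could be encoded and sent over UDP. It runs on a desktop machine rather than on the ESP32 firmware. The address patterns (`/glove/1/button`, `/glove/1/orientation`), the argument layout and the host/port are hypothetical placeholders, not the project’s actual scheme. Only 32-bit integer and float arguments are handled.

```python
import socket
import struct


def osc_string(s: str) -> bytes:
    """Encode an OSC string: ASCII bytes, null-terminated, padded to a multiple of 4 bytes."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Build an OSC 1.0 message with int32 ('i') and float32 ('f') arguments only."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            # bool is a subclass of int in Python; reject it rather than send it as 0/1.
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian 32-bit integer
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian 32-bit float
        else:
            raise TypeError(f"unsupported argument type: {type(arg).__name__}")
    # Message layout: address pattern, type tag string, then the packed arguments.
    return osc_string(address) + osc_string(tags) + payload


def send(message: bytes, host: str = "127.0.0.1", port: int = 9000) -> None:
    """Send one OSC message as a single UDP datagram (host and port are placeholders)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))


if __name__ == "__main__":
    # Hypothetical event: finger 2 pressed (state 1) on glove 1.
    button = osc_message("/glove/1/button", 2, 1)
    # Hypothetical orientation reading: pitch, roll, yaw in degrees.
    motion = osc_message("/glove/1/orientation", 12.5, -3.0, 90.0)
    print(button.hex())
    print(motion.hex())
```

Because OSC messages are self-describing (each carries its own type tags), any OSC-capable receiver, such as a visuals program or a synthesizer, could map these events without a custom protocol. This is what makes reuse for audio or general input plausible.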

“What Can a Body Do?”