OctoSens is a community project by a group of music and technology enthusiasts who, under the guidance of Vaclav Peloušek (Bastl Instruments), combine their different perspectives to develop an interface that lets several different sensors be used simultaneously to synthesise sound and control other devices.

OctoSens

The development of this multi-sensor synthesizer is part of the Projekt Atol and konS platform AIR programme.

Sensors and music

Musicians are always searching for new ways of creating and modifying sound. New technologies, and their growing accessibility, have enabled and encouraged the use of sensors in sound design. However, the great variety of sensors and the lack of practical interfaces often demand advanced technical knowledge or compel artists to buy expensive specialised equipment; both requirements can hinder the creative process.

We decided to look for a solution that would allow artists to use many different sensors to modify sound in an easy and intuitive way. Under the guidance of Vaclav Peloušek (Bastl Instruments), we collectively designed a device and named it OctoSens.

What is OctoSens?

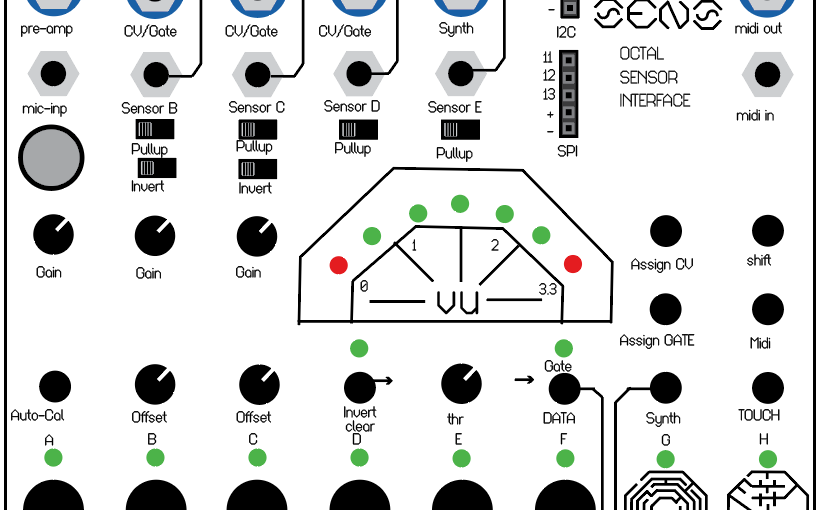

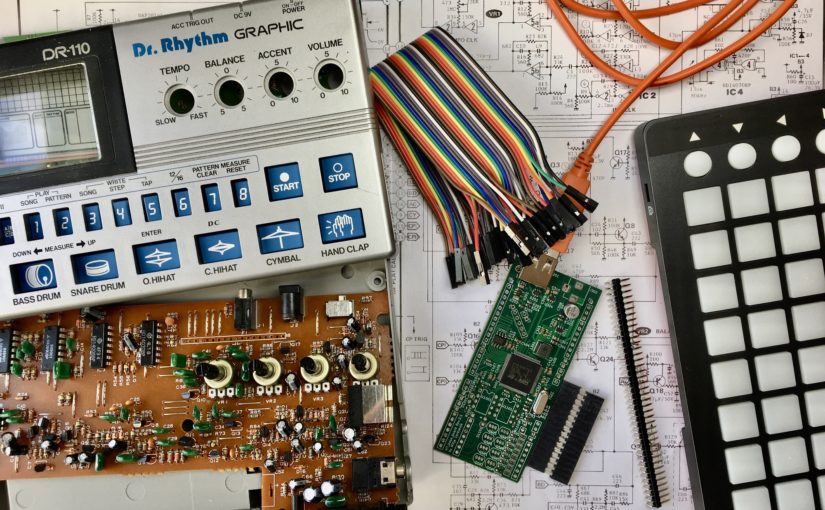

With four analog and two digital inputs for external sensors, as well as two touchpads, the OctoSens offers eight different ways of altering the desired parameters of sound. A built-in microphone, two tactile sensors and a digital synthesizer integrated in the microcontroller allow the OctoSens to be used on its own, without external sensors or other instruments. We can simply connect the OctoSens to a speaker and start creating. The information detected by the sensors can control the volume, pitch, filter frequency and other parameters of the integrated digital synthesizer.

Artists who already have other instruments, synthesizers and effects can connect the OctoSens to their existing gear and multiply its functionality: the OctoSens can output different CV/gate signals and generate MIDI data, which can be used to control multiple external devices simultaneously.

The OctoSens will conform to the Eurorack format so that synth enthusiasts can incorporate it into their Eurorack setup. An individual enclosure will also be available for those who prefer to use it as a stand-alone device. It will be compatible with the popular Arduino Micro and Teensy 3.2 microcontroller boards, which makes it accessible to a wide circle of DIY enthusiasts who want to extend its functionality with their own code.
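To illustrate the MIDI output mentioned above, here is a minimal sketch of how a raw sensor reading could be turned into a MIDI Control Change message. It is not the OctoSens firmware: the function name `sensor_to_midi_cc`, the 10-bit reading range and the choice of controller number are assumptions made for the example. Only the three-byte Control Change layout (status byte `0xB0` plus channel, controller number, value 0 to 127) comes from the MIDI standard.

```python
def sensor_to_midi_cc(raw, controller, channel=0, adc_max=1023):
    """Scale a raw sensor reading to a 3-byte MIDI Control Change message.

    raw        -- sensor reading, assumed to lie in 0..adc_max (10-bit by default)
    controller -- MIDI controller number, 0..127 (hypothetical choice per sensor)
    channel    -- MIDI channel, 0..15
    """
    if not 0 <= channel <= 15:
        raise ValueError("MIDI channel must be in 0..15")
    if not 0 <= controller <= 127:
        raise ValueError("controller number must be in 0..127")

    raw = max(0, min(adc_max, raw))   # clamp out-of-range readings
    value = raw * 127 // adc_max      # rescale 0..adc_max to 0..127
    status = 0xB0 | channel           # 0xB0 marks a Control Change message
    return bytes([status, controller, value])


# Example: a light sensor at full scale, sent as controller 74 on channel 0.
message = sensor_to_midi_cc(1023, controller=74)
```

The same scaling idea would apply to a CV output, with the target range being a voltage instead of the 0 to 127 range of a MIDI value.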

Practical example of the use of OctoSens

Would you like to adjust the tempo of a song to fit your heartbeat, adapt the volume to the light in the room and control the pitch with the movement of your body? All you have to do is connect a heartbeat sensor and a light sensor to the analog inputs of the OctoSens and a gyroscope to one of the digital inputs. By pressing the multifunction buttons, you can map the connected sensors to the desired parameters of the integrated synth, then use the rotary knobs to calibrate each sensor to a range that modulates the sound in a musical way.
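The mapping and calibration steps in this example can be sketched in a few lines. This is only an illustration under stated assumptions: the `Mapping` class, the parameter names and every numeric range below are hypothetical, standing in for what the buttons (sensor to parameter) and knobs (calibration range) would set on the device.

```python
from dataclasses import dataclass


@dataclass
class Mapping:
    """Links one sensor to one synth parameter (all values are assumptions)."""
    parameter: str    # name of the synth parameter to control
    sensor_lo: float  # calibrated lowest useful sensor reading
    sensor_hi: float  # calibrated highest useful sensor reading
    out_lo: float     # parameter value at sensor_lo
    out_hi: float     # parameter value at sensor_hi

    def apply(self, raw):
        span = self.sensor_hi - self.sensor_lo
        if span == 0:
            raise ValueError("calibration range must not be empty")
        t = (raw - self.sensor_lo) / span   # normalise to 0..1
        t = max(0.0, min(1.0, t))           # ignore readings outside the range
        return self.out_lo + t * (self.out_hi - self.out_lo)


# Hypothetical setup matching the example in the text.
mappings = {
    "heartbeat": Mapping("tempo_bpm", 50, 150, 50.0, 150.0),
    "light": Mapping("volume", 200, 900, 0.0, 1.0),
    "gyroscope": Mapping("pitch_hz", -90, 90, 110.0, 880.0),
}


def update(readings):
    """Turn a dict of raw sensor readings into synth parameter values."""
    return {mappings[name].parameter: mappings[name].apply(raw)
            for name, raw in readings.items()}


params = update({"heartbeat": 72, "light": 550, "gyroscope": 0})
```

Clamping to the calibrated range is the design choice that matters here: a sudden flash of light or a sensor glitch cannot push a parameter beyond the limits chosen during calibration. The pitch mapping is linear in hertz for simplicity; a musical mapping would more likely work in semitones.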

The objective of the OctoSens project

OctoSens will be an innovative and competitive product in the fast-evolving market of electronic instruments. At the same time, it will exist in the form of a DIY workshop in which participants learn exactly how the sensors work and deepen their knowledge of sound synthesis and the basics of electronics.

The goal of the project is not only to develop and sell a product. It is foremost an effort to create a community that brings together different generations and provides an interdisciplinary environment for an invaluable exchange of knowledge between professional engineers, artists, students of different fields and audio-electronics enthusiasts.